Subtotal: $

Checkout-

John Wayne, The Quiet Man

-

No Promises

-

Word Is Bond

-

Bring Back Hippocrates

-

The Dance of Devotion

-

Victor Hugo’s Masterpiece of Impossibility

-

A Vow Will Keep You

-

A Broken but Faithful Marriage

-

Can Love Take Sides?

-

A Defense of Vows

-

Why I Chose Poverty

-

Demystifying Chastity

-

The Adventure of Obedience

-

Vows of Baptism

-

Hutterite Ten Points of Baptism

-

The One Who Promises

-

Vows in Brief

-

The Raceless Gospel

-

Poem: “Blessing the Bells”

-

Poem: “Autumn in Chrysalis-Time”

-

Tiny Knights

-

Editors’ Picks: What Your Food Ate

-

Editors’ Picks: Untrustworthy

-

Editors’ Picks: The Last White Man

-

Retooling the Plough

-

Charting the Future of Pro-Life

-

Letters from Readers

-

Home in My Heart

-

Remembering Alice von Hildebrand

-

Sadhu Sundar Singh

-

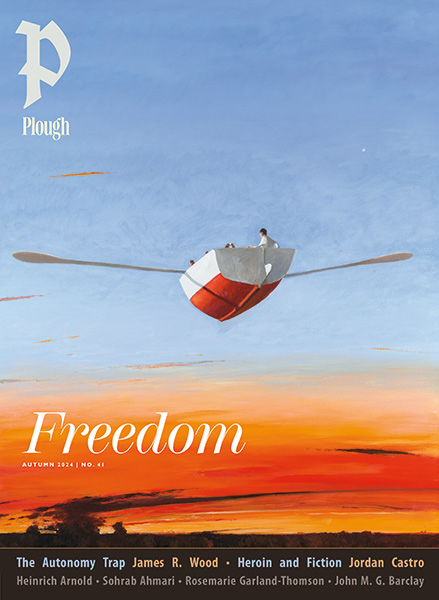

The Day No One Would Say the Nazis Were Bad

Some say relativism is on its way out on college campuses, giving way to partisan passions. This ethics professor finds it alive and well.

By Mary Townsend

August 12, 2022

When the Nazis rolled up to Charlottesville in August 2017, it was clear they posed a peril to many things, and not the least to language. For the last seventy-odd years, since the German Nazis as such were defeated in the European theater, the statement “the Nazis are in town” or “he is such a Nazi” had one meaning, and that meaning was strictly metaphorical. The point of such analogies was to remind us to never be Nazis, in any sense you would care to name. But in the last five years, as further instances of current Nazism have piled up, from swastikas in dorms, to violence against Jews in Brooklyn, to White supremacists inside the Capitol building, language has rolled around in shock at the attempt to pronounce “he is a Nazi” and mean it – without fighting the need to add the gasping and inadequate signifier “literally.” The athleticism of this diction shows it still can’t be said without a certain disbelief. This disbelief announces peril.

The college classroom remains one of the most useful laboratories for the investigation of moral language: and the reason for this is that I, the professor, am not the one with final expertise. My students are, beautifully, very often the better judges on what phrase really reaches ethical velocity for American society’s language at large. There’s one well-known instance, however, where their subtlety can fall short. It used to be that when the name of Hitler showed up in classroom conversation, I would expect serious thought to fly out the window for twenty minutes at least. Usually the example would arrive in the service of proving beyond a shadow of a doubt that something is bad. The students would bring the name up with a certain modest pride, glowing in advance of the praise they assumed they would inevitably receive for taking a principled stand. This used to drive me nuts.

Then one day about six years ago, the opposite happened. I was teaching another philosophy course on Ethics, which I’ve now taught regularly for the last decade. We’d made it to the fifth section of John Stuart Mill’s Utilitarianism (1864), where he attempts to explain away the nature of justice as nothing more than general utility, the expediency of the greatest number; only math will be the judge, no emotions allowed. To get the students riled up a bit and start some discussion, I was trying to get them to think about the phenomenon of righteous indignation, namely, the anger we feel at even the most trivial unfair thing happening – that is, at any rate, when the bad thing happens to happen to us. Mill thinks such anger is animalistic, in the reductive sense of “animal,” and ultimately not helpful of itself for understanding or prosecuting justice in any of its varied forms.

Charlottesville “Unite the Right” Rally, 2017: Alt-right members preparing to enter Emancipation Park holding Nazi, Confederate, and Gadsden “Don't Tread on Me” flags. Photo by Anthony Crider

Now, usually, Mill’s reductive account of anger ought to be enough to raise our ire; who wants to be an animal, reductively speaking? Our response to him naturally awakes the human quality of our reason-loving spiritedness – the psychological je ne sais quoi that Plato, thinking of the Homeric heroes, resurrects out of Homeric language as a crucial principle of our rationally loving and other-protecting selves. Pedagogically speaking, at the beginning of an Ethics semester it’s been useful for me to push this and other extreme aspects of Mill’s argument in full, in the hopes that dissatisfaction with his account will set up a more Aristotelian middle ground later on, where the fairly obvious trick to anger is to get it at the right amount in the right place in the right time.

But this time five years ago, I got stuck in the middle of my argument. Usually I get a pretty quick agreement that a) some things are bad and b) we do get angry about them. This time, nobody would admit that anything was bad; and thinking maybe it must be a sleepy sort of group, and laughing to myself for breaking my own rule, I brought up the Nazis, as a sort of joke, since after all some things are bad, hyperbole regardless.

I couldn’t get anyone to agree that the Nazis were bad. In fact the students completed the argument quite elegantly for me: we each have our own perspective; the Nazis aren’t strictly speaking “bad” because after all, from their perspective they thought they were good; there is no way we can ourselves claim to truthfully state from our perspective that they are bad; Q.E.D. there’s nothing bad about the Nazis.

I was unprepared for this; I walked around in a daze for at least a week. I went to high school in the late 1990s, where the last of the slow-drip of congealed, apparent prosperity before September 11, 2001 meant that, on the surface of the local newsprint at least, the biggest thing in politics going was whether or not Bill Clinton could be caught out in an embarrassing if fairly trivial lie. It was the sort of time where someone could argue that history was over, that Western liberal democracy had won conclusively, and you’d believe him. By tenth grade we had studied The Diary of Anne Frank (1947) no fewer than three times. In my youthful memories the Nazis were so bad, they had attained the boring kind of evil. When cast as villains of a piece, they couldn’t make a splash, they were so obviously over and done with; there could be no dramatic tension in their defeat.

Something had apparently shifted for my students. They clearly expected me to praise them for getting what I must see was the right answer: even the Nazis aren’t bad. The uniformity of this particular class’s reaction was singular and uncanny. I’m used to the attempt to subvert more or less hollow moral outrage; but what do you do when there’s none at all?

Relativism is one of the oldest arguments in the book: Herodotus tells the story about the Persian king Darius, who in a moment of leisure brought in some people from a town where it was customary to eat their dead, and then also some Greeks who for piety’s sake would burn them. Both were equally horrified at the other, and Herodotus takes the opportunity to quote Pindar with perhaps a little smugness: “Custom,” he says, “is king.”

And so I had to laugh when I ran across the claims that relativism is on the way out in colleges across the land, following “the buggy whip” in obsolescence, in favor of some variety of so-called woke passion. In truth, relativism is a fundamentally attractive position, particularly at the moment one’s youthful assumptions are first overturned. The ethical world is full of reversals and fun-house-mirror upheavals, as Aristotle is quick to point out at the beginning of his own series of lectures to the youth on the subject of ethics. The same thing can save you or destroy you (Aristotle’s examples are courage and wealth). It makes sense that the soul’s initial response to the vertigo felt at this realization would be to assume that vertigo is universal, and without remedy.

My experience of college students is very different from the one widely portrayed in the media – the vocal “social justice warriors” ready to call out the slightest departure from the latest trend in academic morality. The fact that this is the dominant image of the college student says more about who has the leisure to complain about kids these days than it does about the real demographics of the American college population, where the vast majority go to state schools and community college. I’ve taught ethical philosophy to students from a variety of economic backgrounds, ranging from experiences at universities that cater to the very wealthy, to colleges meant for various versions of the middle class, to early college programs for high school students who would be the first in their family to go to college, before finally landing in the last four years at St. John’s University, which is one of the most diverse schools in America, and one of the most successful at helping its students to move up the economic ladder.

My current students do not have time for finger-pointing and gotchas; they are much too busy trying not to spend too much money on college before they can get a job – a better job, they hope, than the job or jobs they currently work at to pay for college. This necessitates a practical bent to their thinking (some of my most thoughtful students are pharmacists), and unlike many of my former students, rather wealthier and, on the books, better “prepared” for college work, I know I can count on them each week not to strand me in another classroom full of youthful humans ready to reason away the crimes of the Nazis, or indeed, of humanity at large.

But among the range of classrooms I’ve worked with, across class lines, what I’ve observed is that for the majority of students, of whatever background, the goal of going to college is still nevertheless to get a job, to the exclusion of all other goals. It’s not primarily a place to discover what you think is good or bad or take a stand. It’s a neutral task to complete; and holding on to that neutrality, often in a kind of stoic desperation, is how you make it through. Thus the human phenomenon of relativism, as a strategy for moral and emotional neutrality, remains in its various forms a practical part of many students’ education, as the ground of a necessary stoicism that allows them a safe distance from the harrowing things they experience in and out of the classroom, where one day they might be asked to comment on photos they must view of atrocities from various global conflicts, and the next they might not have enough money to eat.

In the tension of this atmosphere, inevitably some souls, of whatever economic background, will flip from the relativism of “nothing is bad” to the absolutism that “everything is bad.” As Socrates argues in Plato’s Republic, extremes have a way of giving birth to each other, and there’s always going to be something fundamentally surprising to see this strange reversal happen. But it’s this psychological principle, it seems to me, that underlies the extreme forms of moral passion that dominate the news – it’s one thing to attempt to stop a despicable talk from happening, another to actually send the speaker to the hospital. But again, this extremity is rare: on the whole, the urgent need to buckle down and make the grade creates an atmosphere where in pursuit of the comfortable life they envision, it is simply easier to argue that there are “fine people on both sides” (as the Charlottesville Nazis were described by someone better known for asset-building than for moral analysis).

While those most alarmed by campus culture will usually confine their observations to one psychological tendency amongst the elite minority, the problem remains that either absolutism or relativism among elites will have outsized consequences for everyone else. If anything is justified for your own good from your own perspective, it’s too easy for a wolves-of-Wall-Street situation to ensue, to even be desirable as a goal, where all and everything is permitted, most especially depravity. And too often, public moral discourse is shaped by former students of elite colleges, with their half-remembered moral language from whatever decade they happened to have attended, but choked and clogged up by the necessary but inevitably draining fight to realize their own ambitions in the ever more precarious upper-middle-class world of corporate and non-profit alike. It should be more obvious that the collective moral language of a nation should not be taking the lead from someone’s fifteen-year-old memory of what some professional academic, with her own cares and ambitions, said about Foucault or Judith Butler, once, in 1996, at Vassar. Language wants to renew itself; the pitched battles of academic language provide imprimatur but not the necessary fertile ground.

One irony here is that, historically speaking, in the aftermath of World War II, a certain softer version of relativism – say, an insistence on the primacy of grey areas in any moral decision, or the ultimate moral ambiguity in all human stories and beings – has often been supposed, as conscious pedagogy, to teach moderation. (Once a colleague from the English department asked me how class went: “it was all relativism!,” I began; “oh good,” she cut in, “that’s so important!”) Often it appears to offer a carefully negotiated détente, manifested by but not limited to the young, for they have a strong sense that this is the maxim by which we all get along. “Well, that’s just your opinion” is a peacemaking statement to bring out when things get too heated. It’s an uncanny echo of precisely the sort of argument that was supposed to prevent the atrocities of World War II from ever taking place again: intolerance comes from a lack of openness, and openness is what relativism is supposed to secure.

But in fact this is precisely what relativism does not do. In the classroom, it means that any particular instinct you have about the rightness or wrongness of a situation is ultimately untrustworthy since it means you, a bad student, temporarily forgot that ultimately the answer is supposed to be ambiguous. You should have undercut the hero, you should have pointed out the way the villain was, at any rate, consistent in acting for his own interest. Any strong feelings you have about the situation are inevitably déclassé. (The more your education is supposed to be in the service of not being déclassé, the stronger this is felt.) If you happen to have accidentally made this false step, you ought to retreat and admit you stand alone in the isolation of what is “just your opinion.” On the other hand, at the same time, you have to also keep an eye out for previously demarcated evils that can be safely and mechanically denounced. This is what the word “problematic” is for: it draws a line around what’s bad, ostensibly in some ambiguous way, but really allows you to name it as bad simpliciter; you can feel good about knowing it’s bad, but as the remaining looseness in the term implies, not so bad you have to do anything about it.

A member of a white supremacist group at a white nationalist rally that turned violent resulting in one death and multiple injuries. Charlottesville, Virginia, August 12, 2017. Shutterstock

When the example falls out of the bounds of the previously demarcated bad things, the student flounders, and many absurdities will then transpire. For instance, a class on The Divine Comedy might find no one willing to admit that anyone deserves even so much as to be written into Dante’s hell – except Dante himself, for being so arrogant as to put anyone there. To my students, it’s perfectly possible to hold both of these positions simultaneously and feel that with this, they’re covering all their options: Dante is Hitler; no one should go to hell. Again, “all or nothing” is the principle of youthful ardor across the centuries per usual; and yet somehow, currently, these are still the only things we, the adults, have taught our students to do.

But the pedagogical problem most important to understand is that relativism is the worst possible thing we could be teaching the youth, because it is the hardest of all principles from which to rebel. And the sign of the truth of this is that once I was in a room with a group of very fine young people, and no one would say that the Nazis were bad.

If the tacit purpose and practical pressures of a college education have remained fundamentally the same, what changed? One answer lies in education before college. Somewhat infamously, Justin McBrayer, a professor of philosophy at Fort Lewis College, has argued that his own college students’ relativism can be traced in part to the Common Core, the nation-wide attempt at a K-12 curricular standard for public education, and the distinction it makes between what counts as fact and what remains opinion. What typically gets taught, from the very beginning of a student’s education, is that if “good” or “bad” are included in a statement, then such a statement could not be a fact, and therefore can have no claim to truth proper. McBrayer’s critics (including many teachers I respect) counter that the problem is with imperfect training in the standards and the lack of full implementation of the Core’s more complex distinctions. And yet by the time students show up to college, I and my colleagues see this strange uniformity of definition again and again.

The Core did not come up with this idea; that bit of troublemaking goes back at least to David Hume’s fact-value distinction of 1739. But as practiced, it does contain this distinction as a telling symptom within the whole, especially once picked up on by educational companies such as Pearson Realize, which make their money by doing the fairly complicated work of translating the Core’s standards into the most mundane of multiple-choice quizzes. And alarmingly, students from quite fancy private schools, even the “classical” charter schools, still meet me as twenty-year-olds with their faith in the uniform gray of the moral world, often with an extra helping of cynicism about what school is for (it’s for success) to boot. Once on Parents’ Day someone brought a third-grade sister to class, and she was able to produce the standard definition of “opinion” perfectly. During the pandemic, my son had a worksheet where he was asked to mark the sentence “it’s fun to play outside in summer” as pure opinion. And a quick poll of my Ethics class this fall showed that once again, every single student had the same definition memorized. Right now, the definition is ubiquitous.

In recent years I’ve started to assign McBrayer’s article to my students, in order to show that their faith in the divide between facts and opinions doesn’t come from an eternal unseen authority. But it turns out that the revelation that this distinction was bureaucratically constructed doesn’t really give most of them the walking terrors; and largely, many students have no problem with maintaining the distinction to be still fundamentally valid. Even when I bring up issues I know they care very much about, which outside of philosophy class they spend much time working on – unchecked police violence, deplorable prison conditions, homelessness – they still often feel obligated to maintain the acceptable position outside good and evil. In fact, more than once someone has dropped my class after I have spoken too strongly too soon against moral relativism. “Who decides?” a student once asked me again and again, with increasing emotion. What she meant was that no one should, with the emotional panic of faith interrupted.

One student did write once after such a day’s classwork: “I’m shook.” This is a start. But the trouble is, once real relativism takes hold in someone’s heart, it is deeply unassailable by argument. Very, very few of those who start with a strong emotional commitment to relativism are ever fully persuaded. Socrates is famous for persuading the youthful Polemarchus that real justice involves harming no one at all; Polemarchus began, however, fervent in the belief that it’s just to help your friends out and actively harm your enemies. The reason Socrates can maneuver him so elegantly is that Polemarchus has a prior conviction that some things are good and others bad. Without an established habit of taking a stand on something with some regularity, a habit of making a judgment outside of the pre-judged, all the logic in the world won’t be able to tip you over into the conviction that making considered judgments is the right thing to do. The soul needs its angers and desires for living and not the least for justice; rational considerations are not enough.

Ultimately Mill is wrong about righteous indignation and our animal selves: anger contains the seeds of ethical direction, and if you catch it young and remind it to listen to reason, it can be worth listening to. Anger is capable of being educated, even the easily lampooned and quite forgivable hysterics of eighteen-year-olds. But a lack of anger is not: passionless relativism, therefore, is by far the greater problem right now than temporary passion for absolute morality.

Isolating the youth in their own opinions will not temper them; it will continue to produce the awkward duality of relativism and absolutism. The only thing for it is that students and the public at large must be asked to take up the painstaking work of thinking through the particulars of all the minor evils as well as the grand, the banal betrayals and the tediously small self-loves as they come within the everyday, not as world-changing crusade to abolish, but as daily bread to struggle through. This is the work that Aristotle places at the center of his ethical realm, where piece by piece we learn to judge as the just man judges. Most humans are really bad at this. The good news is that one could quite easily do rather more to recommend it.

Two decades after the artificial stasis of the 1990s I grew up in, the Nazis aren’t defeated anymore. They rose from the dead, and they’re not funny. Nazis are, to put it bluntly, bad, so much so as to be wicked indeed: Dante’s hell is empty, and all the devils are here.

But those of us shocked at their re-emergence are extremely rusty on how to talk about goodness and badness to one another. There are the cartoons of evil from books and movies, combined with the problem of presentism, that we’re tempted to believe in the inferiority of all previous civilizations to our own; and also there is the nihilism of the notion that perfectly decent people maintain the position that people of another race ought to be systematically annihilated, or that (racial) eugenics will solve poverty. In the Inferno, the people who never made up their mind one way or the other about the events of the day spend their time in hell envying even those in deeper eternal torment: because at least those guys have a reason to be there.

For adults who came of age when I did, or during the grim politesse of the Cold War, I can see why the snowballing viciousness of the youth when they call out something as bad these days is as inexplicable as it is terrifying, particularly when taken up by those who no longer can claim the excuse of adolescence. And I do worry daily over the eventual burn-out of these few youth, painfully crusading on all fronts, not to mention the adults who resemble them. I wish their desire had not been so thirsty before now, and I also wish they had examples of evil outside of the fantastical worlds of school-grade fiction. Leaving anger untrained for generations by attempting to subvert the soul’s desire for tyranny by unlimited moral ambiguity leaves a mark; it sets up generations ready to be moved by ressentiment in their first attempts towards moral thought, and possibly, to be ready for ever more mindless revenge.

Five years after Charlottesville, the idea that people would march on a college campus in public and vocal support of White supremacy is no longer shocking to me as it once was, as shocking as that thought ought to remain. The daily round of social evils and depravity of current events continues to distract me from the death of Heather Heyer; and from the beauty of remembering how religious leaders of different faiths marched out to place themselves in front of armed Nazi groups, arms linked. But it still seems to me that the common moral language has not caught up to events as they continue and continue to occur.

Absurdist humor, gallows humor, the sort of thing produced on Twitter’s better days, is one promising tool, because laughter at its best brings proportion and even hope. But we still lack a shared way of saying that “this is bad” that doesn’t come off as hopelessly moralistic, helplessly understated, or beside some utilitarian point. Worst of all, the most natural source of new shared moral language – the youth – have been hamstrung in their language by their education.

To spill ink over the moral relativism of the youth is now a distinct subgenre in American letters. As such, it runs the risk of hollow moral outrage, outrage in the service of bad faith at that. The opposite of relativism isn’t the moral clarity or perfect “objectivity” that such hollow morality promises; it can only ever be the daily struggle for better judgment next time, the careful parsing of our first reactions with close friends who don’t necessarily agree with us but are willing to continue to triangulate with us ever closer to the good.

But language matters, and ideas have consequences. The youth are far more alive than most of the rest of us to the existence of really-for-real-Nazis, the Nazis who spray swastikas on their dorm room doors, meme genocide online, and show up to the campus gate in arms. And with any luck, they are still just possibly paying attention to what we the adults say, how we say it, and what might be possible for our shared language, again – the better for them to say it better than we can.

And so I have to continue to say this, even though it sounds like too much and not enough: Bad things are bad, all in their own way and in their own respect. As Philippa Foot saw in 1945, if I can’t say this, I can’t pronounce “the Nazis are bad” in any meaningful sense. To say that badness is less than bad, or that goodness is less than true, is to undercut the moral heart of the world.

Richard Cuming

Another ‘promising tool’. It’s from the British sketch show, ‘The Mitchell and Webb Show’. (Yes, like many British comedians they came out of an elite university, Cambridge...) Links are not permitted, I note, but it’s easy to find: type in ‘Mitchell and Webb, Nazi sketch’ and it comes up on YouTube.

John Ryan

The problem is Politics and Law faculties, which are so much overrepresented in the media.

Al Owski

Not being able to say that Nazis are bad, that Nazism is morally bad, or for that matter that any philosophy is better, indicates a depolarized moral compass. It points in no direction at all, leaving people adrift among competing ideas in a marketplace. What has been lost is the sense of the value of what is human. Nazism as an ideology has an end, as Hannah Arendt says, “to eradicate the [very] concept of the human being.” Nazism’s danger isn’t that it is simply bad, but that it is deceptive. It feigns moral neutrality and reason, but what it actually does is neutralize moral reasoning. It slips in, but the stage is set for it. Philosophies that dominate our Western thinking, such as those of Hobbes (“the worth of a man, is his price”) and Mill (utilitarianism), the emphasis on “practical” curricula such as STEM to the exclusion of ethical training and the arts, and the idea that school is for learning how to be successful (implicitly zero-sum) have all led to this point. The collective memory of what Nazism led to in the 20th century has faded. Each generation needs to inoculate itself against this virus that takes nihilism and turns it into annihilation. I agree that “anger contains the seeds of ethical direction” but the soil must be prepared for ethics to grow from it. You are doing the hard work of tilling the hard-packed soil. Wherever we have influence with younger generations, we should be doing the same.

Alex Bensky

Excellent and thought provoking article, not marred by the semi-obligatory Trump slam. Trump in fact did call out in strong terms the actual neo-Nazis and white supremacists.

Mw

The awkward duality of relativism and absolutism is the best definition of reality.

Lawrence

For Ms Townsend. 1. Only God is good. 2. Quote from Laurens van der Post: "...became like a wild animal and set a bad example for animals." 3. Treat people the way you would have them treat you (which means ignore/stay clear of dangerous people).

Ryan

Excellent article, thank you!

Tom Cathcart

Your article is almost too subtle for my 82-year-old brain, but I got enough of it to see that your students are lucky to have you. I’m sure the less-motivated students find your course too taxing, but keep up the good fight!